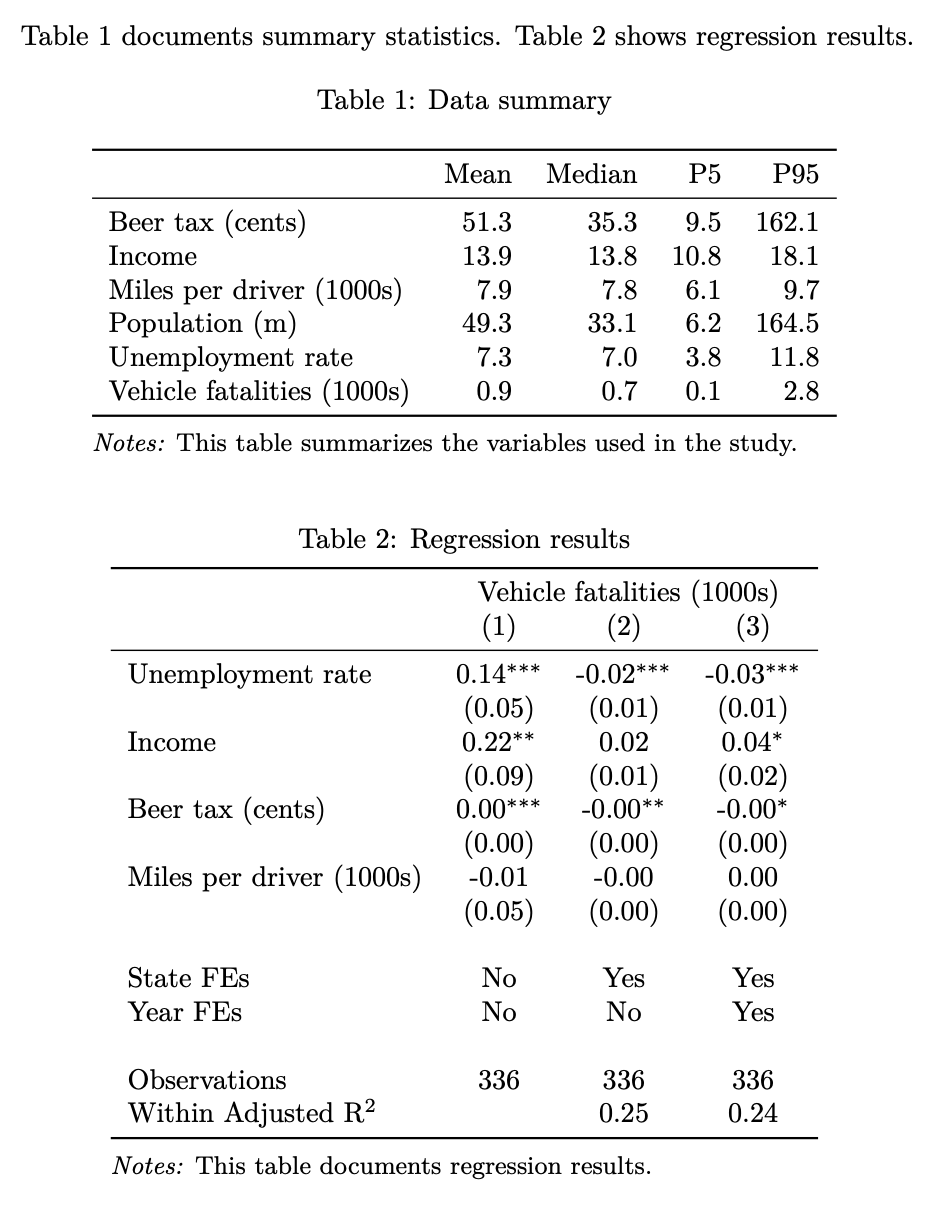

Rows: 336

Columns: 34

$ state <fct> al, al, al, al, al, al, al, az, az, az, az, az, az, az, a…

$ year <fct> 1982, 1983, 1984, 1985, 1986, 1987, 1988, 1982, 1983, 198…

$ spirits <dbl> 1.37, 1.36, 1.32, 1.28, 1.23, 1.18, 1.17, 1.97, 1.90, 2.1…

$ unemp <dbl> 14.4, 13.7, 11.1, 8.9, 9.8, 7.8, 7.2, 9.9, 9.1, 5.0, 6.5,…

$ income <dbl> 10544.15, 10732.80, 11108.79, 11332.63, 11661.51, 11944.0…

$ emppop <dbl> 50.69204, 52.14703, 54.16809, 55.27114, 56.51450, 57.5098…

$ beertax <dbl> 1.53937948, 1.78899074, 1.71428561, 1.65254235, 1.6099070…

$ baptist <dbl> 30.3557, 30.3336, 30.3115, 30.2895, 30.2674, 30.2453, 30.…

$ mormon <dbl> 0.32829, 0.34341, 0.35924, 0.37579, 0.39311, 0.41123, 0.4…

$ drinkage <dbl> 19.00, 19.00, 19.00, 19.67, 21.00, 21.00, 21.00, 19.00, 1…

$ dry <dbl> 25.0063, 22.9942, 24.0426, 23.6339, 23.4647, 23.7924, 23.…

$ youngdrivers <dbl> 0.211572, 0.210768, 0.211484, 0.211140, 0.213400, 0.21552…

$ miles <dbl> 7233.887, 7836.348, 8262.990, 8726.917, 8952.854, 9166.30…

$ breath <fct> no, no, no, no, no, no, no, no, no, no, no, no, no, no, n…

$ jail <fct> no, no, no, no, no, no, no, yes, yes, yes, yes, yes, yes,…

$ service <fct> no, no, no, no, no, no, no, yes, yes, yes, yes, yes, yes,…

$ fatal <int> 839, 930, 932, 882, 1081, 1110, 1023, 724, 675, 869, 893,…

$ nfatal <int> 146, 154, 165, 146, 172, 181, 139, 131, 112, 149, 150, 17…

$ sfatal <int> 99, 98, 94, 98, 119, 114, 89, 76, 60, 81, 75, 85, 87, 67,…

$ fatal1517 <int> 53, 71, 49, 66, 82, 94, 66, 40, 40, 51, 48, 72, 50, 54, 3…

$ nfatal1517 <int> 9, 8, 7, 9, 10, 11, 8, 7, 7, 8, 11, 19, 16, 14, 5, 2, 2, …

$ fatal1820 <int> 99, 108, 103, 100, 120, 127, 105, 81, 83, 118, 100, 104, …

$ nfatal1820 <int> 34, 26, 25, 23, 23, 31, 24, 16, 19, 34, 26, 30, 25, 14, 2…

$ fatal2124 <int> 120, 124, 118, 114, 119, 138, 123, 96, 80, 123, 121, 130,…

$ nfatal2124 <int> 32, 35, 34, 45, 29, 30, 25, 36, 17, 33, 30, 25, 34, 31, 1…

$ afatal <dbl> 309.438, 341.834, 304.872, 276.742, 360.716, 368.421, 298…

$ pop <dbl> 3942002, 3960008, 3988992, 4021008, 4049994, 4082999, 410…

$ pop1517 <dbl> 208999.6, 202000.1, 197000.0, 194999.7, 203999.9, 204999.…

$ pop1820 <dbl> 221553.4, 219125.5, 216724.1, 214349.0, 212000.0, 208998.…

$ pop2124 <dbl> 290000.1, 290000.2, 288000.2, 284000.3, 263000.3, 258999.…

$ milestot <dbl> 28516, 31032, 32961, 35091, 36259, 37426, 39684, 19729, 1…

$ unempus <dbl> 9.7, 9.6, 7.5, 7.2, 7.0, 6.2, 5.5, 9.7, 9.6, 7.5, 7.2, 7.…

$ emppopus <dbl> 57.8, 57.9, 59.5, 60.1, 60.7, 61.5, 62.3, 57.8, 57.9, 59.…

$ gsp <dbl> -0.022124760, 0.046558253, 0.062797837, 0.027489973, 0.03…